Requirements

The package supports the following Python versions: 3.7, 3.8, 3.9, 3.10.

The "waiting" part of execution has three components. The first step is to wait for the pod to leave the Pending phase (KubernetesPodOperator.await_pod_start). Next, if configured to do so, the operator follows the base container logs and forwards them to the task logger until the base container is done. If it is not configured to follow the logs, the operator instead awaits container completion (KubernetesPodOperator.await_container_completion); either way, we must await container completion before harvesting XCom. After (optionally) extracting the XCom value from the base container, we await pod completion.

Previously, depending on whether the pod was "reattached to" (e.g. after a worker failure) or created anew, the waiting logic may have occurred in either handle_pod_overlap or create_new_pod_for_operator.

After the pod terminates, we execute different cleanup tasks depending on whether the pod terminated successfully.

If the pod terminates unsuccessfully, we attempt to log the pod events (PodLauncher.read_pod_events). If, additionally, the task is configured not to delete the pod after termination, we apply a label (KubernetesPodOperator.patch_already_checked) indicating that the pod failed and should not be "reattached to" in a retry. If the task is configured to delete its pod, we delete it (KubernetesPodOperator.process_pod_deletion). Finally, we raise an AirflowException to fail the task instance.

If the pod terminates successfully, we delete the pod (KubernetesPodOperator.process_pod_deletion) if configured to delete the pod, and push XCom if configured to push XCom.

Details on method renames, refactors, and deletions

In KubernetesPodOperator:

Method create_pod_launcher is converted to cached property pod_manager. Construction of the k8s CoreV1Api client is now encapsulated within cached property client. Logic to search for an existing pod (e.g. after an airflow worker failure) is moved out of execute and into method find_pod.

Method handle_pod_overlap is removed. Previously it monitored a "found" pod until completion. With this change the pod monitoring (and log following) is orchestrated directly from execute, and it is the same whether it's a "found" pod or a "new" pod. See methods await_pod_start, follow_container_logs, await_container_completion and await_pod_completion.

Method create_pod_request_obj is renamed build_pod_request_obj. It now takes argument context in order to add TI-specific (task instance) pod labels; previously they were added after return. Method create_labels_for_pod is renamed _get_ti_pod_labels. This method doesn't return all labels, but only those specific to the TI. We also add parameter include_try_number to control the inclusion of this label instead of possibly filtering it out later. Method _get_pod_identifying_label_string is renamed _build_find_pod_label_selector.

Method create_new_pod_for_operator is removed. Previously it would mutate the labels on self.pod, launch the pod, monitor the pod to completion, etc. Now this logic is in part handled by get_or_create_pod, where a new pod will be created if necessary. The monitoring etc. is now orchestrated directly from execute. Again, see the calls to methods await_pod_start, follow_container_logs, await_container_completion and await_pod_completion.

In class PodManager (formerly PodLauncher):

Method start_pod is removed and split into two methods: create_pod and await_pod_start. Method monitor_pod is removed and split into methods follow_container_logs, await_container_completion, and await_pod_completion. Methods pod_not_started, pod_is_running, process_status, and _task_status are removed.
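The order of steps in the waiting and cleanup phases described above can be sketched roughly as follows. This is only an illustration that runs without Airflow or a cluster: SketchOperator and FakePod are hypothetical stand-ins, each step just records its name instead of talking to Kubernetes, the constructor flags are assumed to mirror the operator's log, XCom and pod-deletion settings, and RuntimeError stands in for AirflowException.

```python
from dataclasses import dataclass


@dataclass
class FakePod:
    """Hypothetical stand-in for a pod; `phase` is its final phase."""
    name: str
    phase: str = "Succeeded"


class SketchOperator:
    """Hypothetical stand-in that mirrors the step order described in the text."""

    def __init__(self, get_logs=True, do_xcom_push=False,
                 is_delete_operator_pod=True, existing_pod=None,
                 final_phase="Succeeded"):
        self.get_logs = get_logs
        self.do_xcom_push = do_xcom_push
        self.is_delete_operator_pod = is_delete_operator_pod
        self.existing_pod = existing_pod
        self.final_phase = final_phase
        self.calls = []  # records the order in which steps run

    def find_pod(self):
        self.calls.append("find_pod")
        return self.existing_pod

    def get_or_create_pod(self):
        # Reuse a "found" pod if there is one, otherwise create a new one.
        pod = self.find_pod()
        if pod is None:
            self.calls.append("create_pod")
            pod = FakePod("new-pod", phase=self.final_phase)
        return pod

    def execute(self):
        pod = self.get_or_create_pod()
        # Waiting phase: identical for "found" and "new" pods.
        self.calls.append("await_pod_start")
        if self.get_logs:
            self.calls.append("follow_container_logs")
        else:
            self.calls.append("await_container_completion")
        result = None
        if self.do_xcom_push:
            self.calls.append("extract_xcom")
            result = "xcom-value"  # placeholder value
        self.calls.append("await_pod_completion")
        self.cleanup(pod)
        return result

    def cleanup(self, pod):
        if pod.phase != "Succeeded":
            self.calls.append("read_pod_events")
            if self.is_delete_operator_pod:
                self.calls.append("process_pod_deletion")
            else:
                # Label the pod so a retry does not reattach to it.
                self.calls.append("patch_already_checked")
            # The real operator raises AirflowException here.
            raise RuntimeError(f"Pod {pod.name} failed")
        if self.is_delete_operator_pod:
            self.calls.append("process_pod_deletion")


op = SketchOperator(get_logs=False, do_xcom_push=True)
value = op.execute()
print(op.calls)
```

Because the waiting steps sit in execute rather than in separate "attach" and "create" code paths, passing an existing_pod changes only whether create_pod is recorded; the rest of the sequence is the same.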


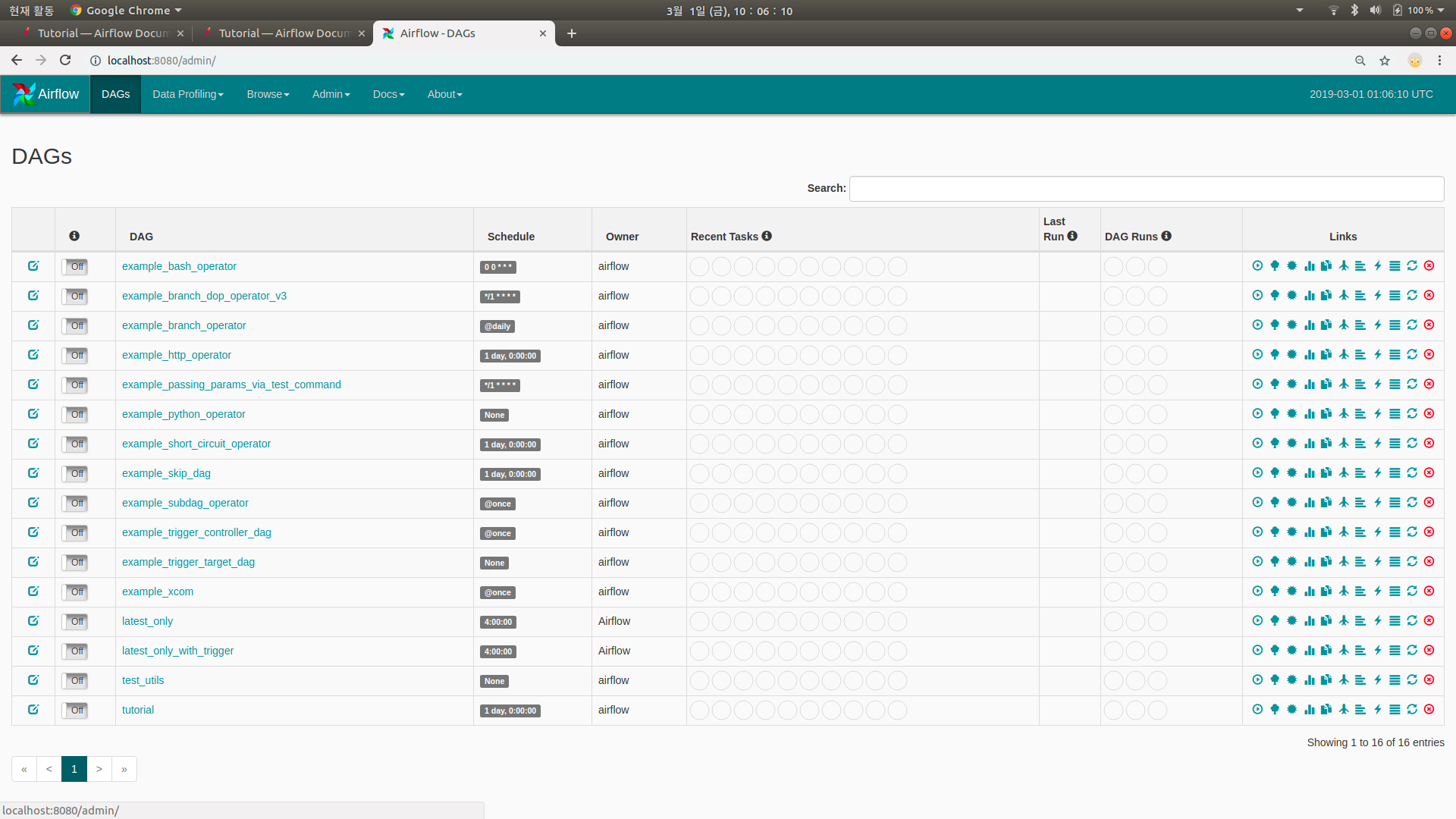

Package apache-airflow-providers-cncf-kubernetes

This is a provider package for the cncf.kubernetes provider. All classes for this provider package are in the provider's Python package. You can find package information and the changelog for the provider in the documentation.

You can install this package on top of an existing Airflow 2 installation (see Requirements for the minimum Airflow version supported) via

pip install apache-airflow-providers-cncf-kubernetes

I am new to ETL and am working with Airflow and Snowflake. I get the max created-at value from a MySQL table using a PythonOperator, and based on that operator's XCom I create a CSV file of Snowflake data, so that only the latest created-at data is dumped from MySQL to Snowflake. The issue is that the Airflow XCom value comes back in double quotes when I pull it inside the SQL template, while Snowflake accepts only single quotes in its SQL query.

Following is my DAG code:

def defaultconverter(o):
def get_max_created_timestamp(sql_table_name):
check_column = f"select column_name from INFORMATION_SCHEMA.COLUMNS where table_name = '
file_format = (TYPE = CSV, COMPRESSION = NONE, NULL_IF=(''), field_optionally_enclosed_by='"' )

Thanks in advance for adding to my knowledge.
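One possible cause, offered as a guess since the full DAG code is not shown: a helper named defaultconverter suggests the callable returns json.dumps(value, default=defaultconverter). JSON-encoding a string wraps it in double quotes, and those quotes then travel through XCom into the template. The sketch below reproduces that effect and shows one way around it. The body of defaultconverter, the timestamp, and the table name my_table are all assumptions made for illustration.

```python
import json
from datetime import datetime


def defaultconverter(o):
    # Assumed body: the question only shows this function's signature.
    if isinstance(o, datetime):
        return str(o)
    raise TypeError(f"Not serializable: {type(o)!r}")


max_created = datetime(2021, 1, 1, 12, 30, 0)  # hypothetical value

# JSON-encoding adds literal double quotes around the string.
encoded = json.dumps(max_created, default=defaultconverter)
print(encoded)

# Decoding (or returning str(max_created) in the first place) gives the
# bare value, which can then be wrapped in single quotes for Snowflake.
plain = json.loads(encoded)
sql = f"select * from my_table where created_at > '{plain}'"
print(sql)
```

If this matches your code, returning the plain string from the Python callable instead of the json.dumps result should be enough, since XCom serializes return values on its own.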
